The majority of data professionals understand the importance of data documentation, yet it often gets overlooked or doesn't receive the attention it deserves.

Why is this problematic?

Let’s start small: imagine you have started a library. In its humble beginnings it is a modest bookshelf with just a handful of books. With so few books, you don’t need a sophisticated system to keep track of them, maybe an excel sheet with a list of titles and their description and you can find what you’re looking for without much effort. But as your collection grows—spilling over into multiple shelves, and then multiple rooms—it starts becoming harder to manually track where each book is located let alone what the books are about. It quickly becomes apparent that your once-simple system is no longer enough.

What started as a manageable collection has grown into a chaotic mess because the cataloging system did not evolve alongside the library's size and complexity. As this collection expands into a massive library, navigating it without labels on the shelves or an index would be a nightmare, even for the most adventurous book lover. At this point (or sooner) would be a good time to implement something like the Dewey Decimal System. This system provided the appropriate solution for libraries to manage their vast and diverse collections.

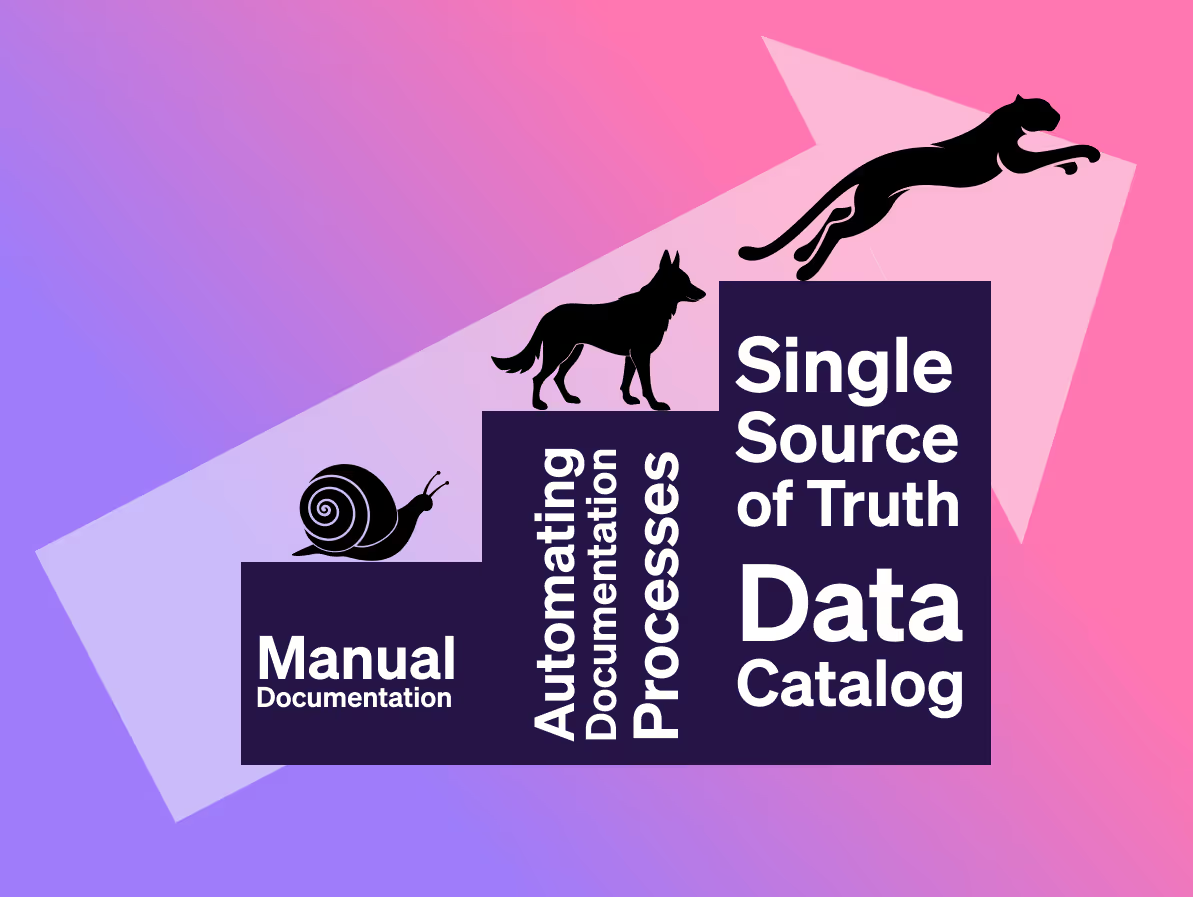

Think of your data as that growing library. A small collection of sources and tables is easy to manage, but as your data grows in size and complexity, you’ll need something more powerful, like the Dewey Decimal System for libraries, to keep everything organized and accessible. This means it's incredibly important to assess your data maturity to ensure that your data documentation evolves alongside your data, so you don’t end up with a disorganized, chaotic mess that hinders productivity and decision-making. But how can teams ensure their documentation processes evolve as their needs grow? In this article, we will cover the concept of the Data Documentation Maturity Curve, which illustrates what it means to crawl, walk, and run with your documentation.

Why Data Documentation Matters for Modern Data Teams

Just as with the library and a cataloging system, data documentation is not just a nice-to-have but a must-have. It provides structured records about your data, including metadata, lineage, and business definitions. When data documentation is done right it forms the backbone of data usability, reliability, and governance, ensuring that teams can trust and effectively use their data. Organizations will see:

Enhanced Collaboration: Documentation serves as a central resource and the single source of truth that all team members can access, promoting consistency and clarity in how data is understood and used. In its basic form, documentation might include simple descriptions of data fields and basic usage instructions while at its most robust implementation, it can offer comprehensive details such as data definitions, column level lineage, usage guidelines, validation rules, and data quality checks. Documentation ensures that whether team members need to understand the meaning of a data field, trace the origin of data, assess an impact of a change, trace the source of an error, find dataset SMEs, or verify its accuracy, they have all the information they need to work efficiently and cohesively.

Improved Data Quality: Well-documented data processes help identify and resolve data quality issues more efficiently.

Facilitate Decision-Making: With accurate and up-to-date documentation, the end user can make informed decisions faster and with more confidence. We love confidence in data!

Strengthened Governance: Documentation is key for auditing and compliance with data governance standards, reducing the risk of regulatory breaches.

The Data Documentation Maturity Curve

The Data Documentation Maturity Curve is a framework meant to help organizations assess their current documentation practices and plan for future growth. The Data Documentation Maturity Curve is divided into three stages: Crawl, Walk, and Run; with the thresholds for each stage based on the number of data sources, tables, and the size of the data team. We decided crawl, walk and run because they felt like a fitting analogy to the progressive nature of scaling your data documentation practices.

Caveats

While the maturity curve provides a general guideline for organizations to understand their current documentation needs and what will be required as they scale, it’s important to remember that these thresholds are not absolute—they should be adapted to the organization’s specific context, industry, and data maturity. Factors such as the complexity or criticality of data, like personally identifiable information (PII), might require more advanced practices earlier in the curve.

Maturity Stages

Crawl

The crawl stage is characterized by manual documentation, typically done through tools like Excel, Google Sheets, Confluence, or Notion. This approach can be practical for small-scale data operations that are just beginning to grow. At this point, you might have a limited number of data sources or tables and a very small team, possibly even just one person.

Documentation at this stage can vary in complexity, depending on the author's approach, but it usually provides an overview of data sources and tables. However, as the number of sources and tables increases, maintaining this documentation becomes increasingly unsustainable. A similar challenge arises when the data team starts to expand (we've all encountered that infamous Excel sheet passed around the team). Using this approach for data documentation means there is no version control and no enforceable quality control, making it difficult to maintain accuracy of your documentation as your operations grow.

It will become obvious as everything scales that sustainability and scalability quickly become significant challenges. Once you reach this step it will be time to start walking with your data documentation.

Walk

The crawl stage in data maturity involves automating documentation processes. dbt, either dbt Core or a managed dbt Core solution with Datacoves, is a great choice for managing growing datasets with increasing complexity. It provides a comprehensive overview of your project, including model code, a Directed Acyclic Graph (DAG) that clearly visualizes sources, models, and dependencies, as well as insights into tests and other crucial details—all available out of the box.

dbt documentation also includes metadata about your data warehouse, such as column data types and table sizes. dbt further simplifies the documentation process by allowing you to add descriptions to models, columns, and sources through a YAML configuration.

While dbt is a fantastic tool, it has limitations because dbt documentation is only focused on the transformations within dbt. As your data ecosystem becomes more complex, you might hit a wall with data documentation. For example, if you have projects using other tools for transformations, they won’t be included in your dbt documentation. Additionally, dbt docs do not integrate with metadata from other data adjacent tools such as your BI tool. dbt docs from dbt Core does not offer column level lineage.

Scalability can also become an issue as the number of dbt models increases. dbt docs becomes increasingly slow as the number of dbt models increases since it leverages a static website. Larger teams may also want to split a large dbt project into smaller ones, however, dbt docs will not handle cross project dependencies. When your team starts to face these issues it’s time to put on your running shoes.

Run

The ultimate goal of a data driven organization is to make data pervasive in decisions at every level. At this stage, documentation needs to serve as the single source of truth for all data-related questions meaning you need a system that not only documents transformations, like dbt, but also tracks data assets across the entire data ecosystem.

Additionally, when multiple teams are involved in the data lifecycle such as data engineers, analysts, and business users, documentation needs to be accessible and understandable to all. While dbt’s documentation is powerful for technical teams, it may not fully cater to the needs of non-technical users or be easily accessible to all stakeholders like a data catalog can be.

Additionally, at this stage we need to enrich metadata by multiple stakeholders. We may want business users to add glossary terms, data domains, different types of owners, data classifications, and when a description is not clear, update it. This is where a full-featured data catalog comes in.

Why a Data Catalog?

A strong data catalog is the answer to the complexities at this stage because it offers the following capabilities that will improve data management in your company:

Comprehensive Metadata Management: By combining metadata, audit logs, query history and more from many tools into one place, a data catalog such as Select Star gives you a comprehensive overview of your data environment and provides you a single source of truth. As seen in the crawl stage, human intervention is not scalable and error prone. That is why Select Star's automated metadata collecting and daily syncing is essential to guarantee that your data is up to date.

Advanced Search and Discovery: Finding the appropriate data assets can become difficult as data volume and complexity rise. Strong google-like search features in a data catalog make it readily available for users to find and comprehend data assets throughout the whole organization. Finding the most relevant datasets is made easier with Select Star's universal search tool, which prioritizes results based on data popularity.

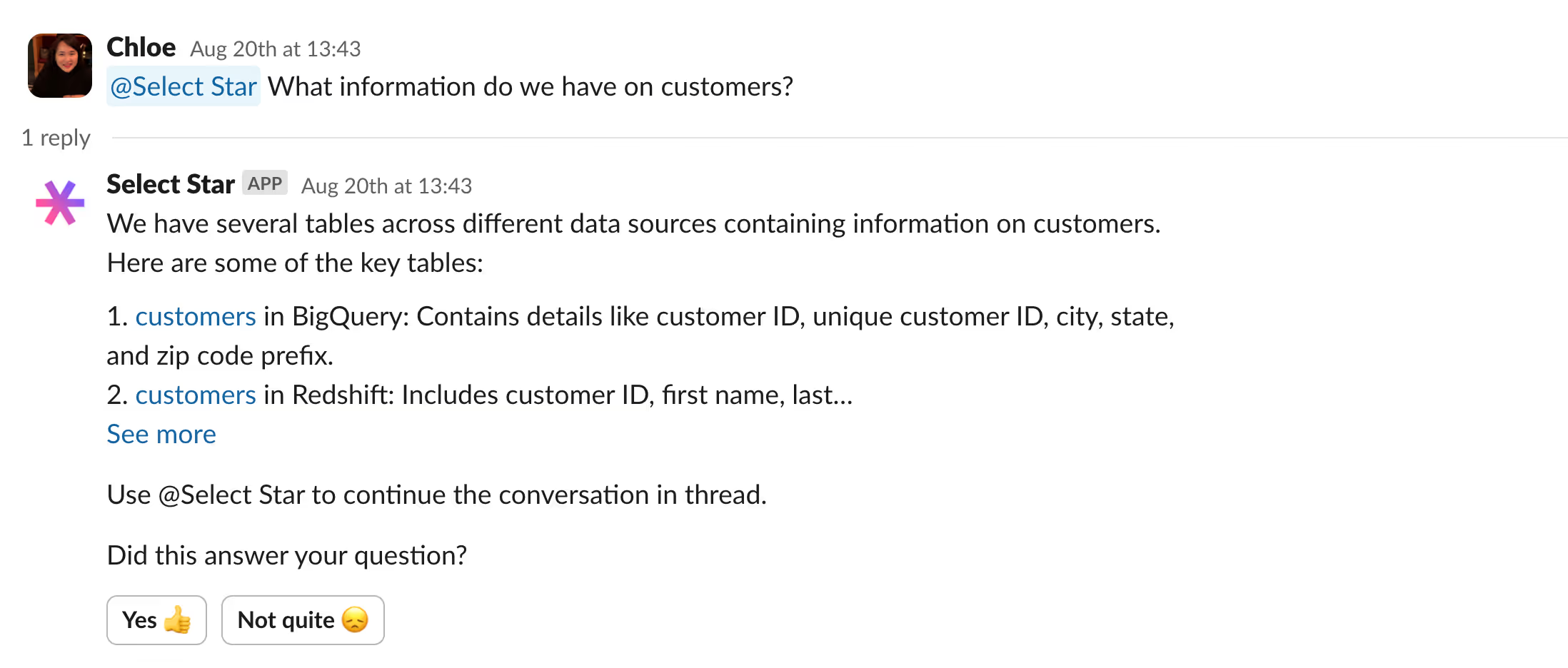

*Bonus* AI Docs: Select Star uses AI to simplify the life of data stewards. Their AI feature can automatically document your data and answer internal data questions on behalf of your data analysts. This is a true AI Co-pilot for your documentation!

Data Lineage and Impact Analysis: With complex data, knowing the history of data is essential. Tools like dbt may provide lineage but a data catalog can provide both cross platform table-level and column-level lineage by monitoring data flow and transformations throughout the whole data value chain. Users can visualize dependencies from raw data sources to final dashboards with Select Star's automatic data lineage features, which speeds up and improves the accuracy of impact analyses.

Collaboration and Documentation: Advanced collaboration features like tagging, commenting, and comprehensive documentation capabilities can be explored in a data catalog. These features make it possible for diverse teams to collaborate more successfully, guaranteeing that documentation is accurate and rich with perspectives from a range of stakeholders.

Automated Syncing and Collection of Metadata: Manually updating metadata in a complex environment is a no go. You need a data catalog to make sure that your documentation is up to date with the most recent state of your data. A data catalog automates the regular collection and synchronization of metadata from integrated systems to maintain your metadata with the least amount of manual labor possible.

Integration with Multiple Platforms: Using multiple tools and technologies, such as Snowflake, AWS Redshift, dbt, Tableau, PowerBI, and Airflow, is common in complex data environments. By facilitating a smooth interaction with various systems, a data catalog makes sure that all source metadata is combined and easily available in one place.

Data Quality Monitoring: The importance of data quality grows exponentially with data complexity. Data profiling features are often included in a data catalog, offering insights into the quality of the data and supporting the early detection of problems. With the data quality monitoring tools that Select Star provides, you can keep your complicated pipelines consistent and dependable.

Final Thoughts

Organizations need to evaluate their documentation as they expand to keep data usable and intact, maintaining the high business value of that data. Just as the Dewey Decimal System might be overkill for a small bookshelf, a catalog might be too much in the early stages of your data maturity.

Organizations should plan for future growth and ensure they move to the next phase as needed because data documentation is more than just a technical necessity—it’s a strategic advantage. This means organizations need to know when to crawl with manual spreadsheets, walk with YAML-based docs, and eventually run with sophisticated data catalogs. Assess the maturity of your documentation today and take the steps needed to improve it according to your organization’s evolving needs.